Notes on Andrew Ng: Machine Learning Week 2

I. Linear Regression with Multiple Variables

Welcome to week 2! I hope everyone has been enjoying the course and learning a lot! This week we’re covering linear regression with multiple variables. We’ll show how linear regression can be extended to accommodate multiple input features. We’ll also discuss best practices for implementing linear regression.

We’re also going to go over how to use Octave. You’ll work on programming assignments designed to help you understand how to implement the learning algorithms in practice. To complete the programming assignments, you will need to use Octave or MATLAB.

As always, if you get stuck on the quiz and programming assignment, you should post on the Discussions to ask for help. (And if you finish early, I hope you’ll go there to help your fellow classmates as well.)

(1) Multivariate Linear Regression

Multiple Features

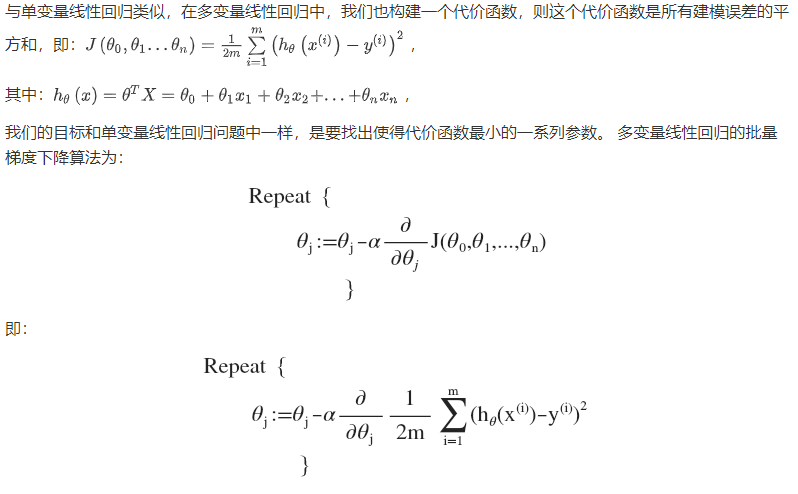

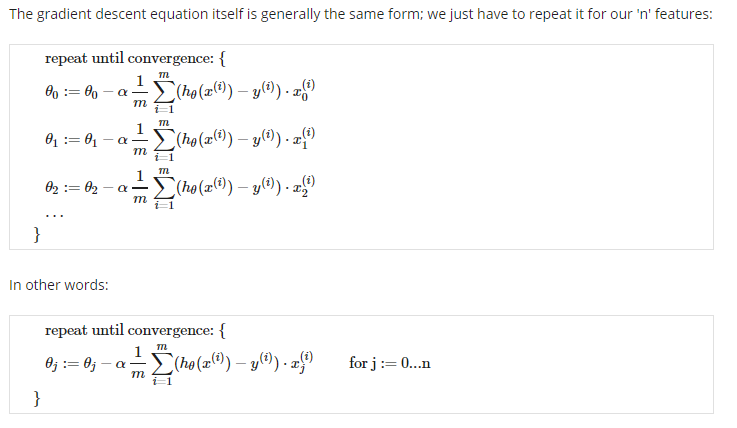

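Gradient Descent for Multiple Variables

Taking the partial derivative of the cost function J(θ) with respect to each θj gives the update rule, which is applied simultaneously to every θj for j = 0, 1, …, n.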
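The formula itself is missing from these notes. Assuming the usual setup from the course (m training examples, hypothesis h_θ(x) = θᵀx with x0 = 1, and learning rate α), the cost function and the update rule can be written as:

```latex
J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2

\theta_j := \theta_j - \alpha \, \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}
\qquad \text{for } j = 0, 1, \dots, n
```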

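Python: computing the cost function

import numpy as np

def computeCost(X, y, theta):
    # X: (m, n+1) design matrix, y: (m, 1) targets, theta: (1, n+1) row vector
    # "@" is matrix multiplication and works for both np.ndarray and np.matrix
    inner = np.power((X @ theta.T) - y, 2)  # squared residuals
    return np.sum(inner) / (2 * len(X))     # J(theta) = sum / (2m)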
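The update step could be sketched in the same style as computeCost above. This is only an illustration, not code from the course: the name `gradient_descent`, the shapes (theta as a 1 x (n+1) row vector) and the tiny data set are assumptions made for the example.

```python
import numpy as np

def gradient_descent(X, y, theta, alpha, num_iters):
    """Batch gradient descent for linear regression (illustrative sketch).

    Assumed shapes: X is (m, n+1) with a leading column of ones,
    y is (m, 1), theta is (1, n+1).
    """
    m = len(X)
    cost_history = []
    for _ in range(num_iters):
        error = X @ theta.T - y            # (m, 1) residuals h(x) - y
        gradient = (error.T @ X) / m       # (1, n+1), one entry per theta_j
        theta = theta - alpha * gradient   # simultaneous update of every theta_j
        cost_history.append(np.sum(np.power(X @ theta.T - y, 2)) / (2 * m))
    return theta, cost_history

# Made-up data that follows y = 2 * x exactly.
X = np.array([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])
y = np.array([[2.0], [4.0], [6.0]])
theta, history = gradient_descent(X, y, np.zeros((1, 2)), alpha=0.1, num_iters=2000)
```

Computing the whole gradient before touching theta is what makes the update simultaneous; updating theta_0 first and then reusing it for theta_1 would be a different algorithm.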

Gradient Descent in Practice 1 - Feature Scaling

We can speed up gradient descent by having each of our input values in roughly the same range. This is because θ will descend quickly on small ranges and slowly on large ranges, and so will oscillate inefficiently down to the optimum when the variables are very uneven. The way to prevent this is to modify the ranges of our input variables so that they are all roughly the same.

Ideally: −1 ≤ x(i) ≤ 1 or −0.5 ≤ x(i) ≤ 0.5

These aren’t exact requirements; we are only trying to speed things up. The goal is to get all input variables into roughly one of these ranges, give or take a few. Two techniques to help with this are feature scaling and mean normalization.

Two techniques

- Feature scaling: divide the input values by the range (i.e. the maximum value minus the minimum value) of the input variable, resulting in a new range of just 1.

- Mean normalization: subtract the average value of an input variable from the values for that input variable, resulting in a new average value for the input variable of just zero.
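Both techniques are commonly combined into a single step, x_i := (x_i − μ_i) / s_i, where μ_i is the mean of feature i and s_i is its range. A minimal sketch, assuming NumPy arrays with one feature per column. The function name `mean_normalize` and the housing numbers are made up for illustration.

```python
import numpy as np

def mean_normalize(X):
    """Return (X - mean) / range for each column, plus the statistics used.

    Assumes every column has a non-zero range (max != min).
    """
    mu = X.mean(axis=0)
    value_range = X.max(axis=0) - X.min(axis=0)
    return (X - mu) / value_range, mu, value_range

# Hypothetical data: house size (square feet) and number of bedrooms.
X = np.array([[2104.0, 3.0],
              [1600.0, 3.0],
              [2400.0, 4.0],
              [1416.0, 2.0]])
X_scaled, mu, value_range = mean_normalize(X)
```

Returning `mu` and `value_range` matters in practice: the same statistics have to be applied to any new input before making a prediction.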

Gradient Descent in Practice 2 - Learning Rate

Debugging gradient descent

Make a plot with number of iterations on the x-axis.

Now plot the cost function, J(θ) over the number of iterations of gradient descent.

If J(θ) ever increases, then you probably need to decrease α.

Automatic convergence test

Declare convergence if J(θ) decreases by less than E in one iteration, where E is some small value such as 10⁻³. However, in practice it’s difficult to choose this threshold value.
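The test described above might be sketched as follows. The function name, the `max_iters` safety cap and the data are assumptions for illustration; `epsilon` plays the role of E.

```python
import numpy as np

def descend_until_converged(X, y, theta, alpha, epsilon=1e-3, max_iters=10000):
    """Run gradient descent until J(theta) drops by less than epsilon in one step."""
    m = len(X)
    prev_cost = np.sum((X @ theta.T - y) ** 2) / (2 * m)
    for iteration in range(1, max_iters + 1):
        theta = theta - alpha * ((X @ theta.T - y).T @ X) / m
        cost = np.sum((X @ theta.T - y) ** 2) / (2 * m)
        # Only a small *decrease* counts; an increase means alpha is too large.
        if 0 <= prev_cost - cost < epsilon:
            return theta, iteration, True
        prev_cost = cost
    return theta, max_iters, False

# Made-up data that follows y = 2 * x exactly.
X = np.array([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])
y = np.array([[2.0], [4.0], [6.0]])
theta, iterations, converged = descend_until_converged(X, y, np.zeros((1, 2)), alpha=0.1)
```

With a loose threshold the loop may stop while θ is still some distance from the optimum, which is one reason the threshold is hard to pick and plotting J(θ) is often preferred.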

It has been proven that if the learning rate α is sufficiently small, then J(θ) will decrease on every iteration.

Summary

- If α is too small: slow convergence.

- If α is too large: may not decrease on every iteration and thus may not converge.
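Both cases can be seen on a toy problem. The sketch below records J(θ) per iteration for three learning rates; the specific values of α and the data are assumptions chosen so that one run is slow, one converges well, and one diverges.

```python
import numpy as np

def cost_history(X, y, alpha, num_iters=50):
    """Return J(theta) after each of num_iters gradient descent steps from theta = 0."""
    m = len(X)
    theta = np.zeros((1, X.shape[1]))
    history = []
    for _ in range(num_iters):
        theta = theta - alpha * ((X @ theta.T - y).T @ X) / m
        history.append(np.sum((X @ theta.T - y) ** 2) / (2 * m))
    return history

# Made-up data that follows y = 2 * x exactly.
X = np.array([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])
y = np.array([[2.0], [4.0], [6.0]])
histories = {alpha: cost_history(X, y, alpha) for alpha in (0.001, 0.1, 0.5)}

for alpha, history in histories.items():
    print(f"alpha={alpha}: first J={history[0]:.4g}, last J={history[-1]:.4g}")
```

Plotting each history against the iteration number gives the debugging plot described earlier: a curve that rises means α should be reduced, and a curve that falls very slowly suggests α can be increased.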

Features and Polynomial Regression

We can improve our features and the form of our hypothesis function in a couple of different ways.

We can combine multiple features into one. For example, we can combine x1 and x2 into a new feature x3 by taking x1 * x2.

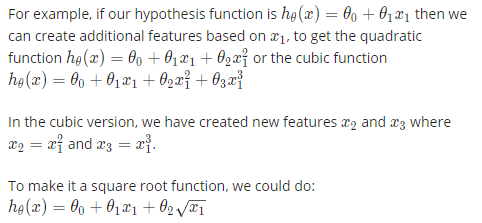

Polynomial Regression

Our hypothesis function need not be linear (a straight line) if that does not fit the data well.

We can change the behavior or curve of our hypothesis function by making it a quadratic, cubic or square root function (or any other form).

One important thing: feature scaling

One important thing to keep in mind is that if you choose your features this way, feature scaling becomes very important.

E.g. if x1 has range 1 to 1000, then the range of x1^2 becomes 1 to 1,000,000 and that of x1^3 becomes 1 to 1,000,000,000.
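A small sketch of this effect, assuming a single raw feature x1 sampled between 1 and 1000 (the sample itself is made up). It builds the polynomial columns and then mean-normalizes them so that all three end up in a comparable range.

```python
import numpy as np

x1 = np.linspace(1.0, 1000.0, 50)              # raw feature with range 1 to 1000
X_poly = np.column_stack([x1, x1**2, x1**3])   # columns: x1, x1^2, x1^3

print(X_poly.max(axis=0))                      # largest value in each column

# Mean-normalize each column: subtract the mean, divide by the range.
mu = X_poly.mean(axis=0)
value_range = X_poly.max(axis=0) - X_poly.min(axis=0)
X_poly_scaled = (X_poly - mu) / value_range
```

After scaling, every column has mean 0 and range 1, so gradient descent no longer sees one feature that is a million times larger than another.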

(2) Computing Parameters Analytically

Normal Equation

The normal equation formula: θ = (XᵀX)⁻¹Xᵀy

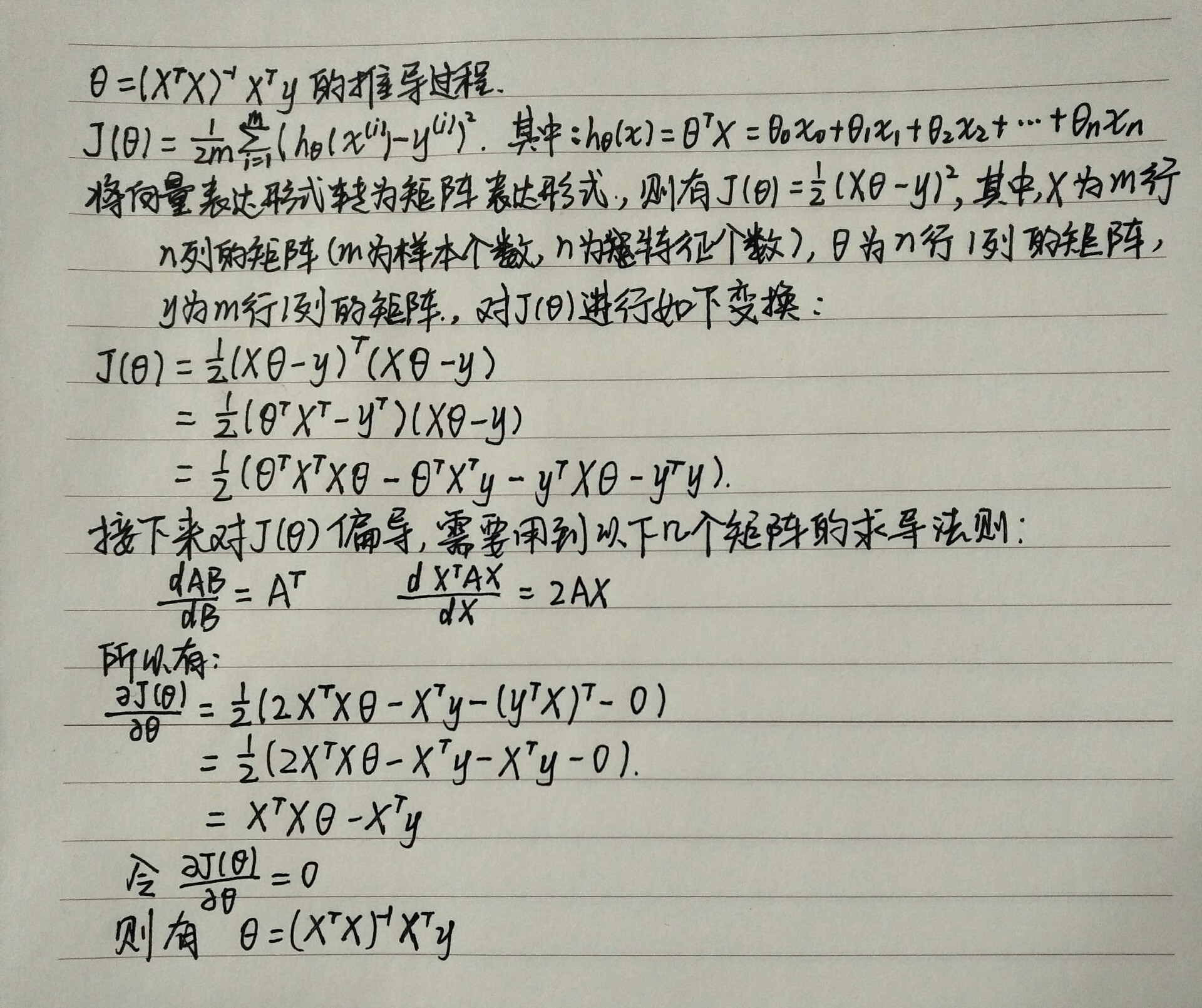

Formula derivation process

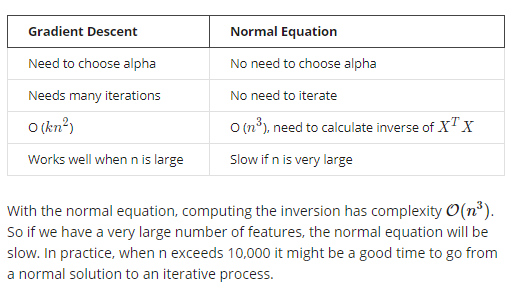

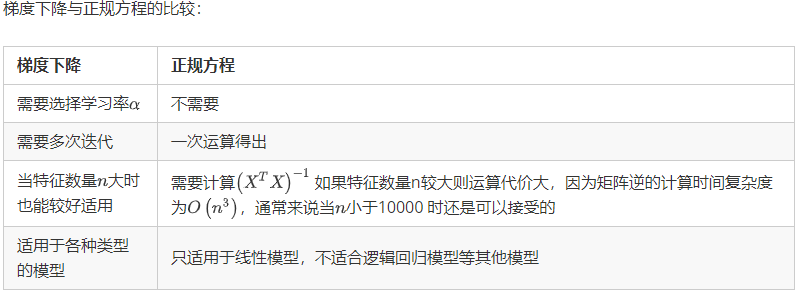

A comparison of gradient descent and the normal equation

There is no need to do feature scaling with the normal equation.

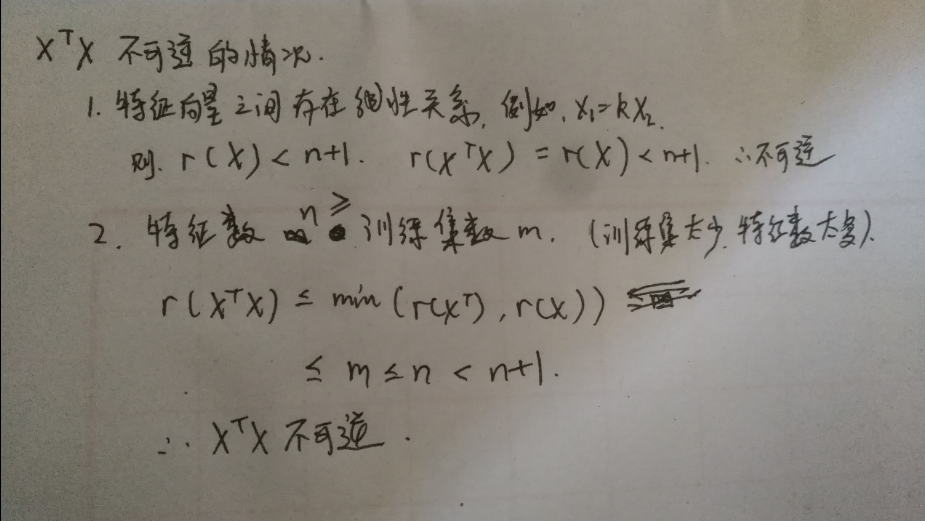

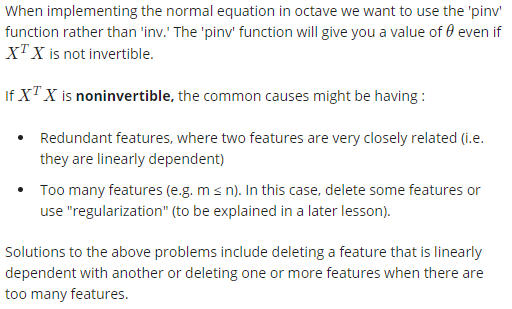

Normal Equation Noninvertibility

Python: implementing the normal equation

import numpy as np

def normalEqn(X, y):
    # Closed-form solution: theta = (X^T X)^(-1) X^T y
    theta = np.linalg.inv(X.T @ X) @ X.T @ y  # X.T @ X is equivalent to X.T.dot(X)
    return theta
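normalEqn above relies on np.linalg.inv, which is not reliable when XᵀX is noninvertible. That typically happens with redundant (linearly dependent) features or with more features than training examples. The course suggests Octave's pinv for this case; a rough NumPy counterpart is np.linalg.pinv. The sketch below is an assumption-laden illustration: the function name, the explicit `rcond` cutoff and the data (third column is exactly twice the second) are made up for the example.

```python
import numpy as np

def normal_eqn_pinv(X, y):
    """Normal equation using the pseudo-inverse, which tolerates a singular X^T X."""
    # rcond is set explicitly so near-zero singular values are treated as zero.
    return np.linalg.pinv(X.T @ X, rcond=1e-10) @ X.T @ y

# Hypothetical data with a redundant feature: column 2 equals 2 * column 1.
X = np.array([[1.0, 1.0, 2.0],
              [1.0, 2.0, 4.0],
              [1.0, 3.0, 6.0],
              [1.0, 4.0, 8.0]])
y = np.array([[3.0], [5.0], [7.0], [9.0]])   # y = 1 + 2 * x1
theta = normal_eqn_pinv(X, y)
predictions = X @ theta
```

Because the features are redundant, θ is not unique; the pseudo-inverse picks one valid solution, and the predictions still match y.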

智能推薦

Andrew Ng-深度學習-第一門課-week2

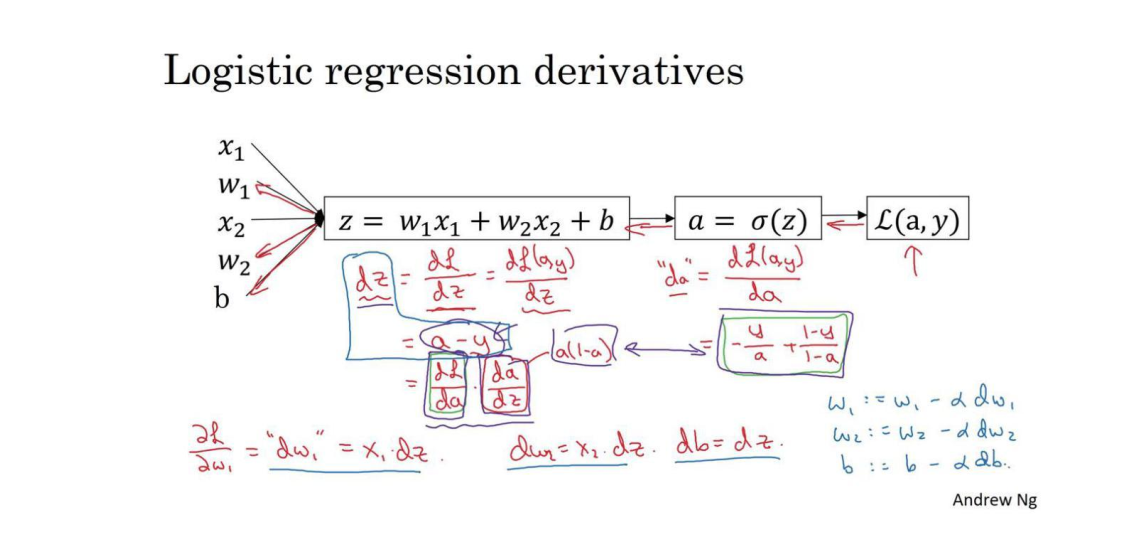

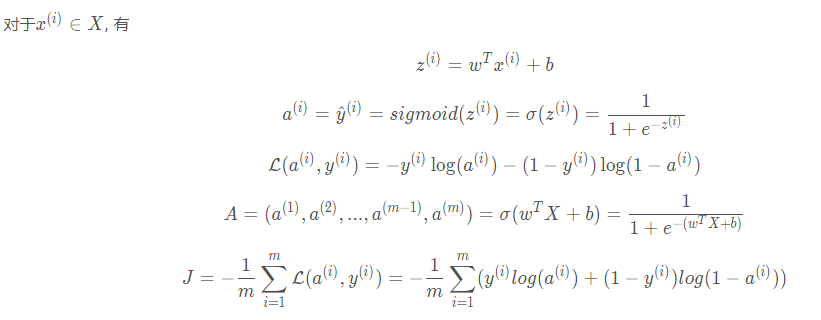

1.2.2 第一位代表第一門課,第二位代表第幾周,第三位代表第幾次視頻。編號和視頻順序對應,有些章節視頻內容較少進行了省略。對內容進行簡單的總結,而不是全面的記錄視頻的每一個細節,詳細可見[1]。 1.神經網絡和深度學習 1.2 Basics of Neural Network programming 1.2.1 Binary classification 符號定義 : xxx:表示一個nxn_x...

過度擬合-機器學習(machine learning)筆記(Andrew Ng)

過度擬合overfitting 什么是過度擬合 如何解決過擬合問題 正則化 正則化線性回歸 正則化邏輯回歸 過度擬合(overfitting) 什么是過度擬合 欠擬合:如果一個算法沒有很好的擬合數據,比如一個本應該用二次多項式擬合的數據用了線性去擬合,導致最后擬合數據的效果很差。我們稱之為欠擬合(underfitting)或者高偏差(high bias)。 過擬合:如果一個應該用二次多項式去擬合的...

Machine Learning(Andrew Ng)ex2.logistic regression

Exam1 Exam2 Admitted 0 34.623660 78.024693 0 1 30.286711 43.894998 0 2 35.847409 72.902198 0 3 60.182599 86.308552 1 4 79.032736 75.344376 1 Exam1 Exam2 Admitted 0 34.623660 78.024693 0 1 30.286711 43...

吳恩達深度學習筆記(一)week1~week2

吳恩達深度學習筆記(一) 筆記前言:距離開學過去也有兩個半月了,浮躁期也漸漸過去,兩個半月糊里糊涂的接觸了些機器學習/深度學習的知識,看了不少資料,回來過頭來看還是覺得看吳恩達老師的課受益最深,深入淺出,在此也強烈推薦每一個想進入機器學習領域又苦于無從入手的同學以該視頻課程作為入門。上周做了組內的第一次匯報便是講神經網絡,準備過程中發現之前自認為弄懂的知識點甚是模糊,然后又想想這幾個月的學習,大都...

Neural Networks and Deep Learning(week2)神經網絡的編程基礎 (Basics of Neural Network programming)...

總結 一、處理數據 1.1 向量化(vectorization) (height, width, 3) ===> 展開shape為(heigh*width*3, m)的向量 1.2 特征歸一化(Normalization) 一般數據,使用標準化(Standardlization), z(i) = (x(i) - mean) / delta,mean與delta代表X的均值和標準差,最終特征處...

猜你喜歡

freemarker + ItextRender 根據模板生成PDF文件

1. 制作模板 2. 獲取模板,并將所獲取的數據加載生成html文件 2. 生成PDF文件 其中由兩個地方需要注意,都是關于獲取文件路徑的問題,由于項目部署的時候是打包成jar包形式,所以在開發過程中時直接安照傳統的獲取方法沒有一點文件,但是當打包后部署,總是出錯。于是參考網上文章,先將文件讀出來到項目的臨時目錄下,然后再按正常方式加載該臨時文件; 還有一個問題至今沒有解決,就是關于生成PDF文件...

電腦空間不夠了?教你一個小秒招快速清理 Docker 占用的磁盤空間!

Docker 很占用空間,每當我們運行容器、拉取鏡像、部署應用、構建自己的鏡像時,我們的磁盤空間會被大量占用。 如果你也被這個問題所困擾,咱們就一起看一下 Docker 是如何使用磁盤空間的,以及如何回收。 docker 占用的空間可以通過下面的命令查看: TYPE 列出了docker 使用磁盤的 4 種類型: Images:所有鏡像占用的空間,包括拉取下來的鏡像,和本地構建的。 Con...

requests實現全自動PPT模板

http://www.1ppt.com/moban/ 可以免費的下載PPT模板,當然如果要人工一個個下,還是挺麻煩的,我們可以利用requests輕松下載 訪問這個主頁,我們可以看到下面的樣式 點每一個PPT模板的圖片,我們可以進入到詳細的信息頁面,翻到下面,我們可以看到對應的下載地址 點擊這個下載的按鈕,我們便可以下載對應的PPT壓縮包 那我們就開始做吧 首先,查看網頁的源代碼,我們可以看到每一...